As artificial intelligence (AI) and digital technologies become more integrated into the lives of aging populations, these innovations present incredible opportunities for enhancing health, independence, and social connection. However, they also raise significant ethical and privacy concerns, particularly as older adults may face increased vulnerabilities.

From virtual assistants to AI-powered health monitoring tools, the use of technology in senior care has grown rapidly in recent years. While these tools offer promising solutions to improve quality of life, it is essential to consider the ethical and privacy issues that accompany the implementation of such technologies. The key to ensuring older adults feel comfortable using AI-driven solutions lies in addressing these concerns—fostering trust, ensuring safety, and maintaining respect for privacy.

Globally, organizations like the World Health Organization (WHO) have also weighed in on how AI impacts aging populations. The WHO emphasizes the need to eliminate ageism from AI development and use in healthcare, ensuring that older adults are fairly represented in data and that AI tools are designed with their needs in mind. This involves not only legal but also ethical measures to prevent AI from exacerbating inequalities in healthcare for older adults (source: World Health Organization).

The Growing Role of AI in Senior Care

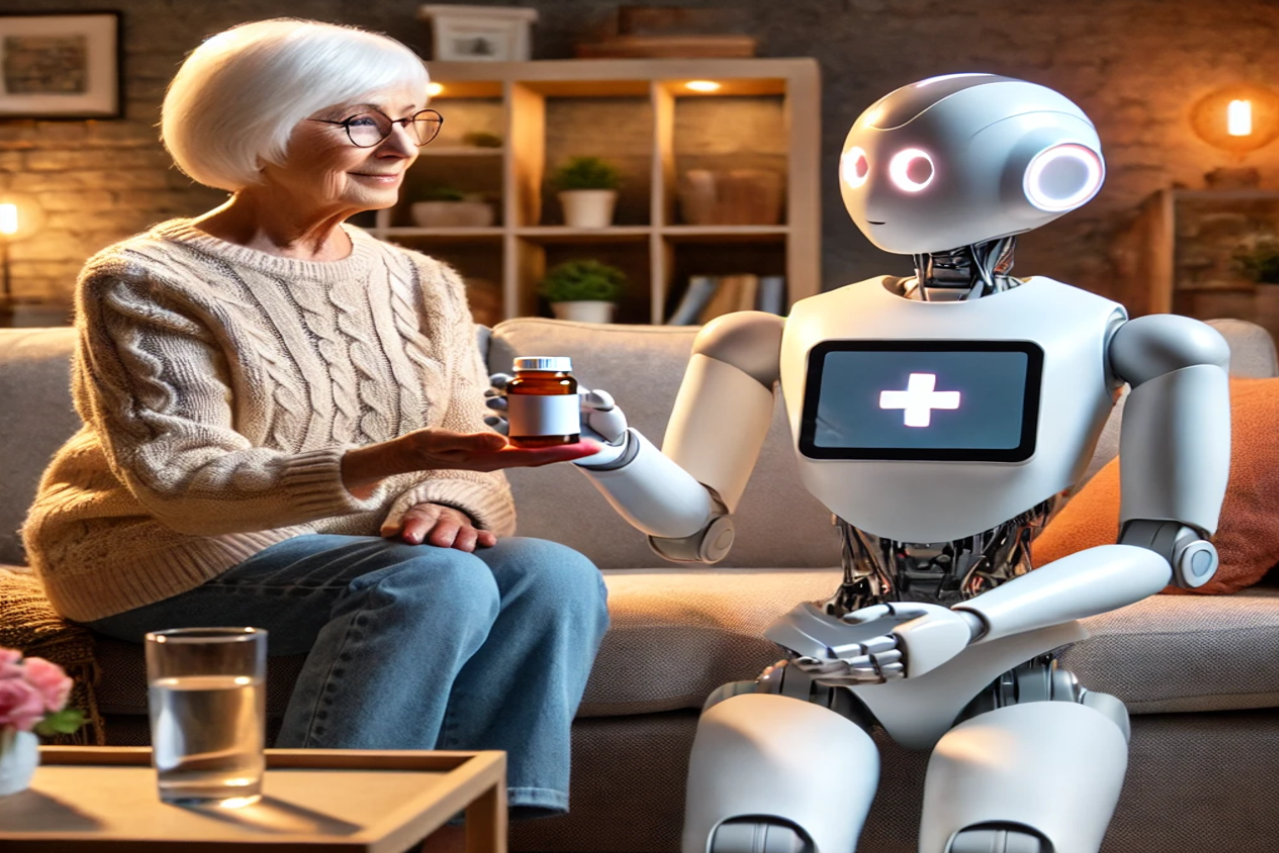

AI-powered technologies are rapidly being adopted in senior care, from wearable devices that monitor heart rate and detect falls to virtual assistants that remind users to take medication or schedule appointments. According to a 2022 study by the World Health Organization (WHO), the global population aged 60 years and older is expected to reach 2.1 billion by 2050, increasing the demand for AI and digital solutions that support aging adults. These technologies can provide critical support for maintaining independence, monitoring health conditions, and ensuring overall well-being.

However, with this growth comes the need for responsible use. As more data is collected from older adults through wearables, health apps, and AI-powered systems, concerns over privacy, security, and ethical data usage become paramount.

Ethical Challenges in AI for Aging Populations

Data Collection and Consent

AI systems require significant amounts of data to function effectively, especially in personalized healthcare. Older adults using health monitoring devices, for example, may have their vital signs, daily routines, and behaviors continuously tracked. The ethical challenge arises around obtaining informed consent—do older adults fully understand how their data will be used and who will have access to it?

Aging adults may be more vulnerable to misunderstandings or manipulation when it comes to digital consent. A study published in The Gerontologist (2023) found that 65% of older adults were not fully aware of how their personal data was being used by health technologies. This gap in understanding raises ethical concerns about transparency and ensuring users are empowered to make informed choices about sharing their information.

Best Practices:

- Providing clear, accessible, and easy-to-understand consent forms that cater to the cognitive and literacy levels of older adults.

- Offering personalized explanations (via AI or human agents) to ensure that users comprehend how their data will be used.

Bias and Fairness in AI Algorithms

AI algorithms are only as unbiased as the data they are trained on. If the data fed into these algorithms is not representative of the diversity found in older populations, AI-powered tools may produce biased outcomes. This is especially concerning in healthcare applications, where an algorithm’s decisions may affect a patient’s diagnosis or treatment plan.

For example, if an AI-powered diagnostic tool is trained primarily on data from younger adults, it may be less accurate when assessing conditions in older patients. This could lead to misdiagnoses or inappropriate care, further marginalizing already vulnerable populations.

Best Practices:

- Training AI algorithms on diverse, age-appropriate data sets to reduce bias and ensure fair outcomes.

- Regular audits of AI systems to identify and correct potential biases that may disproportionately impact older adults.
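As a rough illustration of what a regular audit could check, the sketch below compares a model's accuracy across age bands and flags any band that trails the best one. The function names, the age bands, the 5-point gap threshold, and the sample records are all hypothetical choices made for this example; a real audit would use clinically meaningful metrics and far more data.

```python
from collections import defaultdict


def accuracy_by_age_group(records, bands=((0, 40), (40, 65), (65, 200))):
    """Return {band_label: accuracy} for (age, predicted, actual) records.

    The bands are illustrative assumptions, not a clinical standard.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for age, predicted, actual in records:
        for low, high in bands:
            if low <= age < high:
                label = f"{low}-{high - 1}"
                total[label] += 1
                correct[label] += int(predicted == actual)
                break
    return {label: correct[label] / total[label] for label in total}


def flag_gaps(accuracies, max_gap=0.05):
    """List bands whose accuracy trails the best band by more than max_gap."""
    best = max(accuracies.values())
    return sorted(label for label, acc in accuracies.items() if best - acc > max_gap)


# Made-up (age, predicted, actual) records, for illustration only.
sample_records = [
    (30, 1, 1), (35, 0, 0),
    (50, 1, 1), (55, 0, 1),
    (70, 1, 0), (80, 0, 1), (75, 1, 1), (68, 0, 0),
]

audit = accuracy_by_age_group(sample_records)
print(audit)
print("Bands needing review:", flag_gaps(audit))
```

A flagged band is a prompt for human review rather than proof of bias; small samples in any one band can produce large gaps by chance.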

Loss of Human Autonomy

As AI becomes more integrated into the lives of older adults, there is concern about the potential loss of human autonomy. While AI can offer immense support, it should not replace human decision-making or caregiving altogether. Older adults may feel a loss of control if they perceive that AI is making decisions on their behalf without their full involvement.

For example, an AI system may automatically adjust a person’s medication schedule based on predictive analytics, but without clear communication, the individual may feel that their input has been overridden. Autonomy and dignity are critical in aging, and the over-reliance on AI could threaten these values if not managed carefully.

Best Practices:

- Implementing AI tools as supportive technologies that complement, rather than replace, human decision-making and care.

- Ensuring that older adults are fully involved in decisions made by AI and understand how to adjust or override system recommendations.
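One way to keep the person in control is to treat every AI output as a proposal that changes nothing until the user accepts it. The sketch below is a minimal illustration of that pattern; the `Recommendation` class, the `apply_decision` function, and the schedule fields are hypothetical names invented for this example, not part of any real product.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """A proposed change, shown to the user together with its rationale."""
    description: str
    rationale: str
    status: str = "pending"  # becomes "accepted" or "declined"


def apply_decision(schedule, rec, new_time, user_accepts):
    """Return an updated schedule only if the user explicitly accepts.

    The original schedule dict is never modified in place, so a declined
    recommendation leaves the person's own choice untouched.
    """
    if user_accepts:
        rec.status = "accepted"
        return {**schedule, "time": new_time}
    rec.status = "declined"
    return schedule


# Hypothetical example: the system suggests moving an evening dose earlier.
current = {"medication": "example-med", "time": "21:00"}
rec = Recommendation(
    description="Move evening dose to 20:00",
    rationale="Recent sleep data suggests an earlier bedtime",
)

updated = apply_decision(current, rec, "20:00", user_accepts=False)
print(rec.status, updated["time"])
```

Recording the rationale alongside each proposal also gives caregivers and clinicians something concrete to discuss with the person.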

Digital Divide and Accessibility

One of the significant ethical concerns with the implementation of AI for older adults is the digital divide. Many seniors may lack access to the internet, smart devices, or digital literacy skills necessary to fully utilize AI technologies. According to a report from Pew Research (2021), 25% of adults aged 65 and older in the U.S. do not use the internet, which presents a substantial barrier to accessing AI-powered services.

If AI-driven solutions are only accessible to those with high digital literacy, there is a risk of exacerbating health disparities among older adults, particularly in low-income or rural communities.

Best Practices:

- Providing education and resources to improve digital literacy among older adults.

- Designing AI tools that are intuitive, easy to use, and do not require advanced technical skills.

US Federal AI Governance: In 2023, President Biden signed Executive Order 14110, which focuses on ensuring that AI systems are safe, secure, and equitable. This includes specific provisions for healthcare technologies, emphasizing the need for algorithmic fairness, data protection, and real-world monitoring of AI tools used in health settings. The order encourages developers to use representative datasets, particularly when creating AI tools for vulnerable groups, including older adults. It also highlights the importance of maintaining safety standards to protect the privacy and dignity of individuals using AI-driven healthcare technologies (sources: The White House; IAPP).

Privacy Concerns with AI in Aging

Data Security

Older adults may be more vulnerable to data breaches or cyberattacks, as they may not be aware of how to protect their information. The sensitive nature of the data collected by AI-powered health devices—such as medical history, daily routines, and even location data—makes older adults prime targets for exploitation.

A 2023 survey by AARP found that 56% of seniors expressed concerns over their privacy when using technology. Ensuring robust data security measures is crucial to safeguarding the personal information of aging populations.

Best Practices:

- Encrypting sensitive health data and implementing two-factor authentication for AI tools.

- Regularly updating software to address vulnerabilities and enhance protection against cyber threats.

Monitoring and Surveillance

AI systems used in caregiving or health monitoring can sometimes lead to feelings of surveillance among older adults. Constant monitoring, even when done for safety reasons, can infringe on an individual’s sense of privacy and autonomy. For instance, wearables that track movement and send alerts in case of falls may be perceived as invasive by some users.

Finding a balance between the need for monitoring and respecting privacy is a delicate ethical challenge. Older adults should feel empowered to set boundaries and customize the extent of monitoring they are comfortable with.

Best Practices:

- Allowing users to customize their privacy settings, including choosing which data they are comfortable sharing and with whom.

- Ensuring transparency by explaining how monitoring data is used and providing clear options for opting in or out of specific features.
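The opt-in approach described above can be pictured as a small settings object that decides, per data type and per recipient, what leaves the device. This is a minimal sketch under stated assumptions: `PrivacySettings`, `filter_for_recipient`, and the data-type and recipient names are hypothetical, and nothing is shared unless the user has explicitly allowed it.

```python
from dataclasses import dataclass, field


@dataclass
class PrivacySettings:
    """Per-user sharing choices: data type -> set of permitted recipients.

    Anything not listed is not shared (opt-in by default).
    """
    sharing: dict = field(default_factory=dict)

    def allow(self, data_type, recipient):
        self.sharing.setdefault(data_type, set()).add(recipient)

    def revoke(self, data_type, recipient):
        self.sharing.get(data_type, set()).discard(recipient)

    def may_share(self, data_type, recipient):
        return recipient in self.sharing.get(data_type, set())


def filter_for_recipient(reading, recipient, settings):
    """Keep only the fields this recipient is permitted to see."""
    return {key: value for key, value in reading.items()
            if settings.may_share(key, recipient)}


# Hypothetical wearable reading and sharing choices.
settings = PrivacySettings()
settings.allow("fall_alert", "daughter")
settings.allow("heart_rate", "physician")

reading = {"fall_alert": True, "heart_rate": 72, "location": "kitchen"}
print(filter_for_recipient(reading, "daughter", settings))   # {'fall_alert': True}
print(filter_for_recipient(reading, "physician", settings))  # {'heart_rate': 72}
```

Here location data goes to no one because the user never opted in, and `revoke` lets them withdraw a permission at any time.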

State-Level Legislation: Several states, such as California, Colorado, and Illinois, have enacted laws that specifically address AI-related data privacy and bias concerns. For instance, California’s legislation requires transparency in AI use and demands that developers assess systems for potential bias, ensuring that they do not discriminate against individuals based on age, race, gender, or other protected characteristics. This is especially relevant for aging populations, as AI systems used in healthcare could unintentionally perpetuate ageism if not properly audited (source: The Council of State Governments).

Building Trust in AI for Aging Populations

Addressing ethical and privacy concerns is key to fostering trust in AI among older adults. Trust is essential for successful adoption, especially for a population that may have less experience with digital technologies. By prioritizing transparency, respect for autonomy, and robust data security, AI developers can build solutions that not only improve the lives of aging populations but also instill confidence in the technologies they rely on.

Moving forward, it is critical that developers, policymakers, and caregivers work together to establish clear guidelines and ethical standards for the use of AI in senior care. With thoughtful implementation, AI has the potential to transform aging in a way that respects privacy, ensures fairness, and enhances the well-being of older adults.

While AI-driven solutions offer enormous potential for improving the quality of life for aging populations, they also present ethical and privacy challenges that must be addressed. Ensuring that AI technologies respect the autonomy, privacy, and dignity of older adults is crucial to building trust and ensuring widespread adoption. By incorporating best practices for data security, accessibility, and ethical AI design, we can create a future where technology truly supports the needs of seniors—empowering them to live more independent, connected, and healthier lives.
